Much like the original fall of Babylon two and a half millennia ago, the collapse of Babylon Health was an inevitable end to an untenable empire. It grew too fast, without enough regard for patient safety or the responsible development of AI.

But for years prior, a U.K. oncologist and an employee who had a short tenure at Babylon harbored deep misgivings about the company’s AI technology. The oncologist publicly aired his concerns, while the employee did not.

In interviews with MedCity News after Babylon’s demise, both Dr. David Watkins and Hugh Harvey said that the company had a long history of misrepresenting its technology, and that many employees knew the AI engine was a sham. Watkins is a consultant oncologist working within the U.K.’s National Health Service, and Harvey is a radiologist by training who had a year-long stint with Babylon.

Babylon, which was based in London, was founded in 2013. The company offered virtual primary care, including telehealth appointments and chatbot-style symptom checkers designed to triage patients. It went public in a special-purpose acquisition company (SPAC) merger in 2021, but its financial situation was tenuous in the years that followed.

Babylon shuttered its U.S. headquarters last month after its rescue merger fell apart. Earlier this month, the firm agreed to sell most of its assets to Miami-based digital health company eMed Healthcare via a bankruptcy process.

Both Harvey and Watkins have criticized Babylon’s AI for being “dangerously flawed,” and have raised concerns about the company’s history of making misleading claims about the accuracy of its technology. Because their concerns about Babylon’s legitimacy were so longstanding, they were both unsurprised to hear that the firm collapsed.

Ignoring and silencing doubts

Watkins has held the title of Babylon’s biggest critic since 2017, when he began publicly criticizing the company via Twitter (now X).

48yr old male 30/day smoker woken from sleep with chest pain on Saturday morning… The @babylonhealth app says get a GP appointment!!!! https://t.co/6a9W0ToXVK

— Dr Murphy (aka David Watkins) (@DrMurphy11) January 21, 2017

“When I first tried the Babylon chatbot back in 2017, it quickly became evident that the system was poorly developed with dangerous algorithmic flaws,” Watkins said in an interview recently. “The issues were flagged to the U.K. regulatory authorities. However, they were slow and ineffectual hence I went to the media with my concerns.”

From 2017 to 2020, Watkins posted dozens of screen recordings demonstrating the failings of Babylon’s chatbot. In several of these videos, Watkins was telling the chatbot about symptoms that required immediate medical attention (such as a heart attack or pulmonary embolism), and the AI was reassuring him that he could take care of the issue at home.

Although the flaws were readily evident, Babylon initially ignored Watkins’ tweets and denied the existence of safety concerns, he said. In the summer of 2018, Watkins said he even received an email from a journalist saying that Babylon’s medical director — Dr. Mobasher Butt — told them that his videos had been edited to make the chatbot look bad.

Although Watkins had originally raised concerns directly with U.K. regulatory authorities in 2017, he had remained anonymous on Twitter until February 2020, when he publicly faced Babylon in a contentious debate at the Royal Society of Medicine in London. After Watkins expressed his concerns, Babylon responded in a press release that called him a “Twitter troll.” The press release denied that Babylon’s chatbot made significant errors when triaging patients and claimed that any issues were fixed immediately. However, Watkins remained unconvinced, saying that dangerous flaws were readily identified between 2017 and 2020 and that the company had a history of making false claims by this time.

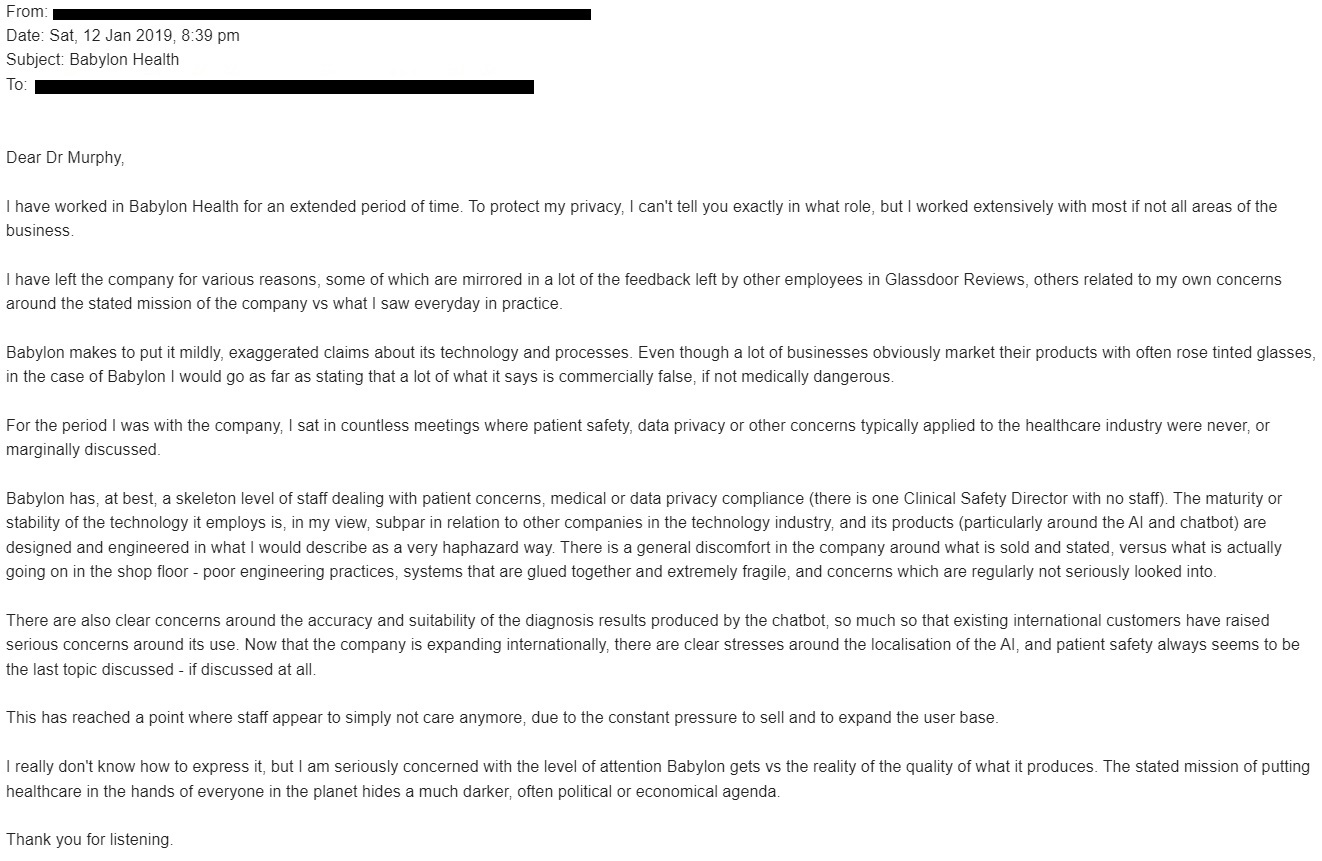

MedCity News reviewed anonymous emails that Watkins received from former Babylon employees. They show that he was not alone in thinking the technology had major drawbacks and could harm patients.

The employee refers to Watkins as “Dr Murphy” because “Murphy Munger” is his Twitter alias and the name he used for an email account dedicated to correspondence about Babylon.

Another email Watkins received from a former employee asserted that Babylon’s AI chatbot was “not AI at all.”

“The Probabilistic Graphical Model (PGM) that the AI Chatbot is based on is definitely NOT built on ANY good quality data. Most of data points are very poorly derived from the subjective input by local doctors. The deviation is so extreme that no reliable statistical model can be applied to it,” the email read.

MedCity News’ calls and emails to Babylon’s media relations team were not returned.

Watkins declared that Babylon’s story of poorly functioning technology and dishonesty echoed that of another well-known, now-shuttered digital health company: the infamous blood diagnostics company Theranos, run by the now-convicted Elizabeth Holmes. Like Theranos, Babylon had its employees sign non-disclosure agreements, and these helped Babylon maintain its grift, Watkins said.

“Staff feared legal action being taken against them. If it weren’t for the NDAs, Babylon staff would have openly discussed the unethical corporate behavior back in 2018,” he charged.

The view from the inside

The day after the company went under, Hugh Harvey — who is now a managing director at U.K.-based digital health consultancy Hardian Health — posted a lengthy thread on Twitter detailing what he experienced during his year-long tenure at Babylon. He joined the firm in late 2016 as a clinical AI researcher, he said in a recent interview.

When Harvey was applying for his position at Babylon, he downloaded the company’s app, which he said was being sold to the public as “an AI-driven triaging system for patients to use.” When it came time for his job interview, Babylon’s leadership revealed to him that the company’s AI engine was in an unfinished, iterative phase.

“But when I started, it was actually just decision trees within spreadsheets,” Harvey recalled. “But that said, they were very quickly starting to build what they called their probabilistic graph model. I guess if you think of it in terms of tech production, it was a minimally viable product. But it didn’t have artificial intelligence in it at the time that I started.”

During his time at Babylon, the company poured millions into its AI workforce, he said. These AI teams were tasked with adding natural language processing and Bayesian mathematics to the firm’s technology, as well as with curating a medical ontology, he added.

This slapdash effort to create legitimate, accurate AI was unsuccessful, Harvey declared. He gave Babylon some credit, though, by saying its natural language processing was slightly usable — for example, “if someone wanted to ask about a nasal problem, it could recognize they were talking about their nose, but that was about it,” he explained.

In his one-year tenure at Babylon, Harvey recalled another particularly problematic incident — a BBC filming in which the company’s AI engine was “jury-rigged to look more accurate” than it actually was.

“BBC was invited to come in and film, but there was no user interface-ready system. It was pretty ropy, and it wasn’t always accurate. It would freeze a lot — all typical stuff you get with early-stage tech. And the data scientists and the team literally slept in the office for a few nights in a row trying to get this thing over the line. They created this big, flashy-looking thing which appeared in the news documentary, but it was nothing like the actual real product that we had — it was made solely for that BBC filming session,” Harvey said.

He left the company in late 2017, feeling “frustrated, disillusioned and wanting to do health tech properly.”

Fake it until you make it

Shortly after his departure, Babylon was misrepresenting the capabilities of its AI technology in the public eye once again, according to Harvey. To publicize the accuracy of its AI system, the firm set up a promotional event where it pitted its system against the Royal College of General Practitioners exam used to assess trainee doctors. Babylon conducted this test itself rather than turning to an independent body, and Harvey claims that the company cherry-picked the questions included in the test.

“It’s a bit silly on very many levels. It’s the wrong test to test this AI — testing medical knowledge is very different from testing medical skill in practice. No one really believed the results because it wasn’t independently verified in any way, shape or form. And the claims were overblown — in a medical exam, you’re not always making a diagnosis. You’re maybe just answering questions about what the best treatment options are or what the best empathetic care might look like, rather than diagnosis,” he declared.

In a live-streamed public relations event held at the Royal College of Physicians in London in June 2018, Babylon announced that its AI scored 81% on the exam, surpassing the average score of 72% for U.K. doctors. Babylon’s public relations agency claimed the event resulted in 111 articles reaching a global audience of over 200 million, according to Watkins.

However, a few months later, the Royal College of Physicians distanced itself from Babylon, and Babylon removed all online content referencing this test of its AI, Harvey explained.

He said he isn’t sure exactly what happened behind the scenes, but he thinks Babylon’s taking down of its validation announcement likely stemmed from the Royal College of Physicians and other medical organizations questioning the legitimacy of the company’s claims. The Royal College of Physicians did not respond to MedCity News’ inquiry to verify Harvey’s hunch.

Watkins believes that the U.K.’s Medicines and Healthcare Products Regulatory Agency told Babylon to remove the promotional material because the company made false and misleading claims regarding the capabilities of its AI system by stating it could be used for diagnosis. The U.K. regulators should have taken a much stronger stance with Babylon in 2018 and informed the public of the misleading claims, he asserted.

Meanwhile, Babylon’s customers, which included health plans and employers, were also quite skeptical about the company’s AI, Harvey pointed out.

“I wasn’t present at early business negotiations or deal-making negotiations, but you’d hear that Babylon promised X or Y functionality to this customer — functionalities we’ve never had on our roadmap or we hadn’t built yet. I always wondered ‘How are they selling stuff that we haven’t built yet to these customers?’ My sense is that they tried to leverage the power of AI by saying, ‘Look, we can build you whatever you want, because we’ve got the know-how to build it,’” he said.

But Babylon’s technical teams couldn’t deliver on the lofty promises the company had made. Therefore, most of Babylon’s contracts ended up falling through, Harvey declared.

“It felt like the business part of the company was almost separate. They were talking about different products than what we were actually building,” he added.

Babylon representatives did not respond to MedCity News’ inquiries regarding Harvey’s claims. Harvey noted that he does not consider himself a disgruntled ex-employee. He is speaking out now because the company has collapsed, and he wants to offer his insight about why the company shut down.

“I’ll say that there were a lot of very good-willed, intelligent and hardworking people who worked at that company. It’s a shame that the leadership oversold it and pushed it too hard and too fast,” he explained.

Watkins has a less charitable view.

“Babylon knowingly released a dangerously flawed medical device and put the public at risk of harm. Babylon made false claims with regard to the capability of their AI technology. And when challenged regarding safety concerns, Babylon lied,” he said.

He added that what Babylon did “went well beyond the bounds” of AI hype.

“[Babylon founder and CEO Ali Parsa] should spend some time with Elizabeth Holmes,” Watkins quipped, referring to Theranos’ disgraced CEO who is incarcerated and not due to be released until 2032.

Parsa didn’t respond to MedCity News’ LinkedIn direct message for comment.

Photo: Feodora Chiosea, Getty Images