Healthcare organizations are using AI more than ever before, but plenty of questions remain about how to ensure these models are used safely and responsibly. Industry leaders are still working out how best to address concerns about algorithmic bias, as well as who bears liability when an AI recommendation turns out to be wrong.

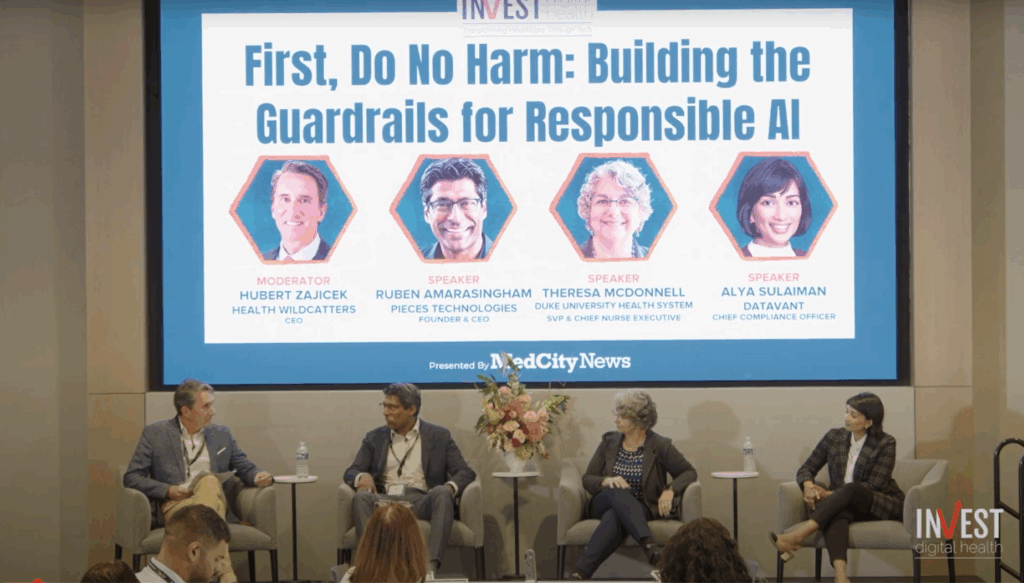

During a panel discussion last month at MedCity News’ INVEST Digital Health conference in Dallas, healthcare leaders explained how they are approaching governance frameworks to mitigate bias and unintended harm. They identified three key pieces: vendor responsibility, better regulatory compliance and clinician engagement.

Ruben Amarasingham, CEO of Pieces Technologies (a healthcare AI startup acquired by Smarter Technologies last week), noted that while human-in-the-loop systems can help curb bias in AI, one of the most insidious risks is automation bias: people’s tendency to overtrust machine-generated recommendations.

“One of the biggest examples in the commercial consumer industry is GPS maps. Once those were introduced, when you study cognitive performance, people would lose spatial knowledge and spatial memory in cities that they’re not familiar with — just by relying on GPS systems. And we’re starting to see some of those things with AI in healthcare,” Amarasingham explained.

Automation bias can lead to “de-skilling,” the gradual erosion of clinicians’ expertise, he added. He pointed to research from Poland, published in August, which found that gastroenterologists who used AI tools became less skilled at identifying polyps.

Amarasingham believes that vendors have a responsibility to monitor for automation bias by analyzing their users’ behavior.

“One of the things that we’re doing with our clients is to look at the acceptance rate of the recommendations. Are there patterns that suggest that there’s not really any thought going into the acceptance of the AI recommendation? Even though we might want to see a 100% acceptance rate, that’s probably not ideal — that suggests that there isn’t the quality of thought there,” he declared.

Alya Sulaiman, chief compliance and privacy officer at health data platform Datavant, agreed with Amarasingham, saying there are legitimate reasons to worry that healthcare personnel could blindly trust AI recommendations or use systems that effectively operate on autopilot. She noted that these concerns have led numerous states to pass laws imposing regulatory and governance requirements for AI, including notice, consent and robust risk assessment programs.

Sulaiman recommended that healthcare organizations clearly define what success looks like for an AI tool, how it could fail and who could be harmed. That can be a deceptively difficult task, because stakeholders often have different perspectives.

“One thing that I think we will continue to see as both the federal and the state landscape evolves on this front, is a shift towards use case-specific regulation and rulemaking — because there’s a general recognition that a one-size-fits-all approach is not going to work,” she stated.

For instance, mental health chatbots, utilization management tools and clinical decision support models might each be better served by their own set of governance principles, Sulaiman explained.

She also highlighted that even administrative AI tools can create harm if errors occur. For example, if an AI system misrouted medical records, it could send a patient’s sensitive information to the wrong recipient, and if an AI model incorrectly processed a patient’s insurance data, it could lead to delays in care or billing mistakes.

While clinical AI use cases often get the most attention, Sulaiman stressed that healthcare organizations should also develop governance frameworks for administrative AI tools, which are evolving rapidly in a regulatory vacuum.

Beyond regulatory and vendor responsibilities, human factors such as education, trust building and collaborative governance are critical to deploying AI responsibly, said Theresa McDonnell, chief nurse executive at Duke University Health System.

“The way we tend to bring patients and staff along is through education and being transparent. If people have questions, if they’ve got concerns, it takes time. You have to pause. You have to make sure that people are really well informed, and at a time when we’re going so fast, that puts additional stressors and burdens on the system — but it’s time well worth taking,” McDonnell remarked.

All panelists agreed that oversight, transparency and engagement are crucial to safe AI adoption.

Photo: MedCity News