Healthcare has been investing in imaging for decades. Long before artificial intelligence entered every boardroom conversation, hospitals were buying scanners, digitizing films, and upgrading radiology departments to improve speed and accuracy. The Picture Archiving and Communication System (PACS) replaced film, reduced turnaround times, and made specialist interpretation easier to access. Digital pathology is now following a similar path, bringing slides, scanners, and algorithms into routine clinical use.

None of this is new. What is new is the scale of imaging and the role it now plays inside healthcare organizations. Radiology and digital pathology together generate some of the largest and most complex datasets in medicine, carrying longitudinal signal, biological detail, and operational context that most other clinical data simply does not.

Imaging is no longer just a diagnostic output. It has become a core input into how health systems understand disease, evaluate outcomes, plan capacity, and, increasingly, learn. In practice, imaging is starting to behave like a shared enterprise intelligence layer, even though most organizations have not designed it that way.

That mismatch is now showing.

Where radiology systems hit their limit

Radiology’s early digitization success created a model that worked extremely well for its original purpose. A scan was ordered, a radiologist interpreted it, a report was delivered. The case moved on. That episodic flow shaped the underlying architecture.

Most imaging infrastructure was built to optimize access and interpretation, not intelligence. PACS, VNAs, and many newer platforms excel at storing images and routing them to the right specialist. But they did not fundamentally change how imaging data was used. Over time, this created a ceiling. Imaging data could move faster, but it could not be recombined easily, analyzed across time, or linked reliably to downstream outcomes.

Radiology answered the question: “What does this scan show right now?” It was never designed to answer questions that matter in value-based care and precision medicine: Why is this disease progressing? How do similar patients respond to treatment? What patterns are emerging across the population?

As healthcare shifted toward longitudinal care models and AI-driven decision support, these data liquidity limitations became harder to ignore. If imaging data cannot be accessed, governed, normalized, and analyzed across the organization, it cannot support the kind of intelligence leaders now expect.

Digital pathology raises the stakes

Just as many organizations were coming to terms with the limits of radiology-centric systems, digital pathology dramatically increased the complexity of the problem.

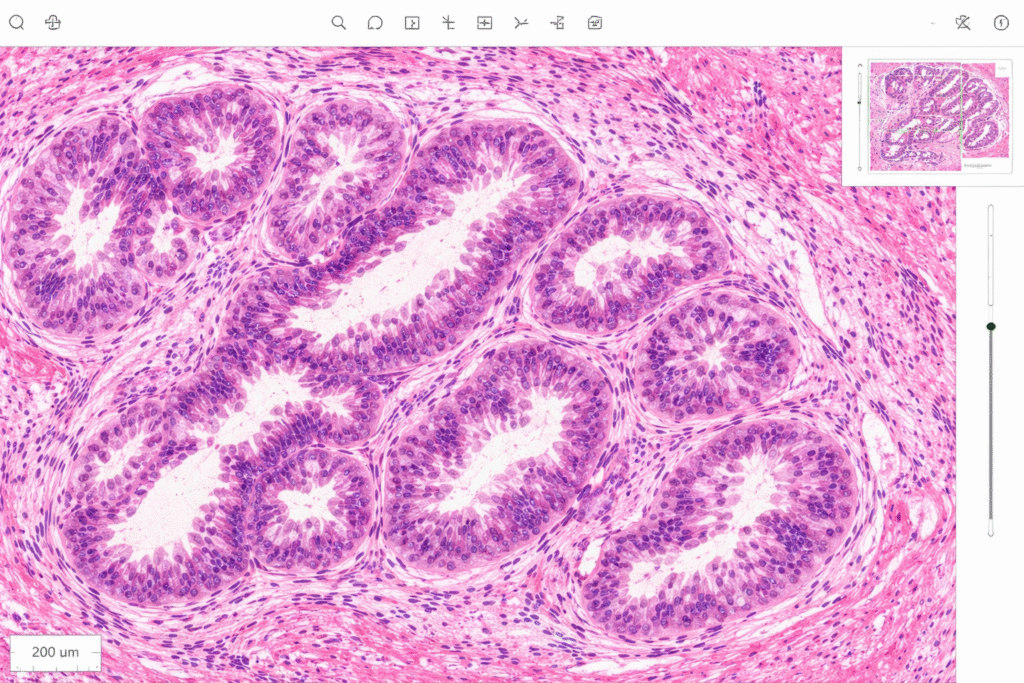

A single scanned slide can generate gigabytes of data. Multiply that across cases, stains, and years, and pathology quickly becomes one of the largest data producers in the enterprise. Unlike radiology, pathology operates at cellular and molecular resolution, capturing information that is essential for understanding disease biology.

This is why pathology has become such fertile ground for machine learning. Algorithms are already showing strong results in cancer detection, grading, and biomarker identification. More importantly, pathology provides the biological context needed to link imaging findings to mechanisms and therapeutic response.

But digital pathology also forces an uncomfortable realization. Many deployments remain tightly bound to LIS-first workflows, proprietary formats, and slide-level silos. In effect, pathology risks repeating the same pattern radiology followed: digitally advanced, operationally useful, but strategically constrained.

More fundamentally, digital pathology breaks the assumptions behind modality-centric, DICOM-era architectures. It exposes the fact that imaging data can no longer be managed one specialty at a time. Governance, normalization, and computation have to happen across domains, not within them.

How multimodal intelligence actually emerges

The most valuable insights in medicine do not come from a single data source. Radiology captures structure and function. Pathology reveals cellular and molecular detail. Clinical records describe phenotype, treatment, and outcomes. Genomics adds risk and susceptibility. Artificial intelligence adds value by connecting these signals.

When these data sources can be analyzed together, healthcare begins to move beyond episodic diagnosis toward precision medicine: disease can be understood over time, and treatment response can be predicted with greater confidence, grounded in real-world data. Large initiatives like the UK Biobank and The Cancer Imaging Archive demonstrate this clearly. However, their success is not driven by superior algorithms alone. It comes from data that was deliberately designed to move, connect, and be reused across studies and populations.

This is the point many organizations underestimate: multimodal intelligence does not emerge because models get better. It emerges when data is liquid enough to support learning across systems, specialties, and time.

Why data liquidity is no longer just an enterprise issue

The implications extend beyond individual health systems.

The Covid-19 pandemic made the cost of fragmented imaging and pathology data painfully obvious. Despite rapid advances in analytics, generating population-level insight proved slow and difficult when data was locked inside institutional boundaries.

The creation of ARPA-H reflects a growing need for national medical discovery infrastructure, and a recognition that biomedical progress is increasingly constrained by infrastructure rather than ideas. National initiatives around digital twins, biosurveillance, pandemic preparedness, and real-world evidence all depend on the ability to access and compute across imaging data at scale.

For healthcare executives, this is a strategic signal. Organizations that enable imaging data to move across the enterprise and beyond it position themselves to participate meaningfully in large-scale research and public health collaboration. Those that do not increasingly find themselves supplying data without shaping how it is used.

Liquidity as a force multiplier

When imaging data becomes liquid, the benefits compound.

- Clinically, imaging insights persist across care pathways instead of living inside isolated workflows. Diagnosis becomes faster. Treatment selection becomes more consistent. Variation becomes easier to manage.

- Operationally, imaging data begins to inform system-level decisions. Capacity planning, scheduling, and command-center operations improve when imaging signals are visible across service lines rather than trapped in departmental systems.

- Financially, liquid imaging data supports new forms of collaboration with life sciences companies, research institutions, and technology partners. Real-world evidence and translational research stop being side projects and start becoming part of enterprise strategy.

Why most health systems are still behind

Despite all of this, most organizations remain structurally unprepared. Imaging data is often treated as departmental property rather than enterprise intellectual capital. Governance is fragmented. Vendor contracts restrict portability. AI initiatives move forward independently of imaging strategy. Legal, compliance, and research teams rarely operate from a shared data agenda.

The result is familiar. Health systems become data-rich and intelligence-poor. They accumulate vast imaging archives without the ability to reuse them for sustained learning or competitive advantage.

Addressing this is not about launching another project. It requires a shift in posture. Imaging and pathology data need to be treated as core enterprise infrastructure and strategic IP. Architectures must be modality-agnostic. Governance must span IT, imaging, research, legal, and AI functions.

Federated learning models, which allow intelligence to be derived across distributed datasets without centralizing sensitive data, will increasingly determine who can participate in regional and national research ecosystems.

Above all, leadership clarity matters. Imaging data liquidity is not an operational upgrade. It is a long-term strategic decision.

In the coming decade, healthcare advantage will not be defined by how many scanners are installed or how many AI pilots are launched. It will be defined by which organizations allow imaging data to move, connect, and compound learning across the enterprise – and use that liquidity to build true multimodal medical intelligence.

Photo: ChatGPT

John Memarian, VP, MedTech Medical Imaging & Informatics, CitiusTech, has over 25 years of clinical and technical experience, with extensive hands-on experience in both invasive and non-invasive adult and pediatric imaging and surgical procedures. He has held various healthcare executive roles in the provider sector. John has consulting and business development experience in bioscience, IT solutions, cloud technologies, enterprise imaging, digital pathology, and clinical genomics. He played a leading role in launching new technologies, including Thromboelastography (TEG), Vendor Neutral Archive (VNA), DICOMized digital pathology, and POC clinical genomics, in the US and international markets.

This post appears through the MedCity Influencers program. Anyone can publish their perspective on business and innovation in healthcare on MedCity News through MedCity Influencers.